AI legos: the Bittensor thesis

composable compute's first killer app?

Disclosure: Omnichain Capital does not have a position in TAO, but may in the future.

A few months ago I published “Compute legos” (link), which discussed the novel capabilities that composable general compute could power. This article focuses specifically on the implications of composable machine learning (ML), the vision behind the Bittensor network (TAO). Before we jump into the protocol, let’s explore why it exists in the first place.

Current state of Artificial Intelligence (AI):

Researchers have been developing AI since the 1950s, but recent breakthroughs in data, compute, and algorithms have led to an explosion in progress, specifically in generative AI (deep learning models that can produce various types of content, including text, imagery, audio and synthetic data, without being explicitly programmed to do so).

The most significant breakthrough came in 2017, when a team at Google Brain created the transformer, a deep learning architecture initially used for language translation. Researchers soon discovered its groundbreaking potential to power AI-generated text and images. Transformers underpin the large language models (LLMs) that companies like OpenAI and Google have built into the products sweeping the globe today.

Market opportunity:

The most popular generative AI product today is ChatGPT, a supercharged chatbot built on top of OpenAI’s GPT-4 LLM. More impressive than its ability to hold human-like conversations on any topic is its seemingly limitless potential as a general-purpose automation tool, especially with third-party API integrations. Generative AI has the potential to significantly augment digital capabilities across all industries and end-markets.

With the potential for such a deep and broad impact, AI’s economic market opportunity is enormous. The AI and ML markets are projected to reach $1.6T and $305B by 2030, respectively, and PwC expects AI to add $15T to global GDP by 2030.

(Precedence Research)

AI’s mechanics and limitations today:

I asked ChatGPT to explain how deep learning works (like I’m five):

In short, training these models requires vast amounts of data and enormous processing power that, today, is accessible primarily to large corporations and research institutions. According to OpenAI, training a single AI model can cost $3 million to $12 million, and training on a large dataset can cost even more, up to $30 million. As a result, generative AI has so far been concentrated among a handful of large players, producing permissioned, siloed models that limit both development efficiency and model functionality.

Siloed AI models cannot learn from each other and are therefore non-compounding: researchers must start from scratch each time they create a new model. This stands in stark contrast to AI research itself, where new researchers build on the work of their predecessors, creating a compounding effect that supercharges idea development.

Siloed AI is also limited in functionality since third-party application & data integrations require permission from the model owner (in the form of technology partnerships & business agreements). Limited functionality = limited value; after all, AI is only as valuable as the utility it can power.

Could crypto be a solution?

Enter Bittensor:

Bittensor was founded in 2019 by two AI researchers, Ala Shaabana and Jacob Steeves, who were searching for a way to make AI compoundable. They soon realized crypto could be the solution — a way to incentivize and orchestrate a global network of ML nodes to train & learn together on specific problems. Incremental resources added to the network increase overall intelligence, compounding on work done by previous researchers & models.

Sound familiar? This is the same logic behind DeFi’s money legos architecture, which drove an explosion in innovation during the 2020 DeFi summer. From “Compute legos” (link):

In theory, with AI legos, Bittensor can scale its AI capabilities much faster and more efficiently than siloed models ever could.

How does it work?

No, this is not “on-chain ML”. Bittensor is a standalone layer-1 blockchain built with Polkadot’s Substrate SDK (not a Polkadot parachain), and it can essentially be thought of as an on-chain oracle (a network of Validators) connecting and orchestrating off-chain ML nodes (Miners). This creates a decentralized mixture-of-experts (MoE) network, an ML architecture in which multiple models, each optimized for different capabilities, are combined into a stronger overall model.

The supply side has two layers: AI (Miners) and blockchain (Validators).

On the demand side, developers can build applications on top of Validators, leveraging (and paying for) use-case-specific AI capabilities from the network.
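To make the Miner/Validator/application split more concrete, here is a toy sketch. It assumes miners are simple functions returning a probability vector, that a validator scores each miner against a known reference answer, and that an application receives a score-weighted blend of miner outputs. The names (`Miner`, `validator_scores`, `mixture_of_experts`) are hypothetical, and this is not Bittensor’s actual scoring or consensus logic. Note also that a textbook MoE usually learns its gating weights per input; here, as a simplification, the weights come from validator scores.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Miner:
    """Hypothetical off-chain model: maps a prompt to a probability vector."""
    name: str
    predict: Callable[[str], List[float]]


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def validator_scores(miners: List[Miner], prompt: str, target: List[float]) -> Dict[str, float]:
    """Score each miner by negative squared error vs. a reference answer (an assumption)."""
    scores = {}
    for miner in miners:
        out = miner.predict(prompt)
        err = sum((o - t) ** 2 for o, t in zip(out, target))
        scores[miner.name] = -err
    return scores


def mixture_of_experts(miners: List[Miner], scores: Dict[str, float], prompt: str) -> List[float]:
    """Blend miner outputs, weighting each by a softmax over validator scores."""
    weights = softmax([scores[m.name] for m in miners])
    outputs = [m.predict(prompt) for m in miners]
    dim = len(outputs[0])
    return [sum(w * out[i] for w, out in zip(weights, outputs)) for i in range(dim)]


# Usage: three hypothetical miners answering a binary question.
miners = [
    Miner("good", lambda p: [0.9, 0.1]),
    Miner("okay", lambda p: [0.6, 0.4]),
    Miner("poor", lambda p: [0.1, 0.9]),
]
target = [1.0, 0.0]
scores = validator_scores(miners, "is this spam?", target)
combined = mixture_of_experts(miners, scores, "is this spam?")
print(scores)
print(combined)
```

The better a miner scores, the more weight its output carries in what the application receives, which is the intuition behind "stronger overall model" above.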

Investment thesis & key risks:

Competitive landscape:

Within the web3 ML landscape, Bittensor’s closest competitors are Gensyn and Together, which offer decentralized ML compute. However, they do not orchestrate an MoE model, which limits their ability to compound AI. Bittensor’s composable ML architecture (AI legos) is unique in the market today.

(link to Messari report)

Valuation & upside:

The cleanest upside comparison is OpenAI, which recently received a $29B private market valuation from Microsoft, 40x higher than Bittensor’s current FDV. If the project is successful, however, its capabilities should far exceed OpenAI’s (compounding AI + more integrations), and therefore could be worth much more than $29B. Digging a bit deeper…

TAO is the network’s utility token, used both to incentivize supply-side participation and as demand-side payment. It can also be delegated (staked) to specific validators (who can in turn bond to specific miners), so the token can also be thought of as a revenue share conduit. Therefore, TAO’s valuation can be derived either from the network’s utility (economic activity built on top) or from direct cash flow to the protocol.
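The "revenue share conduit" idea can be illustrated with a simple pro-rata split. The sketch below assumes a validator earns some reward, keeps a hypothetical commission (`validator_take`), and passes the remainder to its delegators in proportion to the TAO each has staked. The function name, parameters, and numbers are illustrative assumptions, not the protocol’s actual emission or delegation logic.

```python
from typing import Dict, Tuple


def distribute_rewards(
    reward: float, stakes: Dict[str, float], validator_take: float
) -> Tuple[float, Dict[str, float]]:
    """Split a validator's reward into a commission plus pro-rata delegator payouts.

    `validator_take` is a hypothetical commission rate in [0, 1];
    real network parameters may differ.
    """
    if not 0.0 <= validator_take <= 1.0:
        raise ValueError("validator_take must be between 0 and 1")
    total_stake = sum(stakes.values())
    if total_stake <= 0:
        raise ValueError("total stake must be positive")

    commission = reward * validator_take
    pool = reward - commission
    # Each delegator receives a share of the pool proportional to their stake.
    payouts = {who: pool * stake / total_stake for who, stake in stakes.items()}
    return commission, payouts


# Usage: two hypothetical delegators, 100 units of reward, 20% commission.
commission, payouts = distribute_rewards(100.0, {"alice": 600.0, "bob": 400.0}, 0.2)
print(commission, payouts)
```

Under these assumptions, a delegator’s income scales with both their share of the validator’s stake and the rewards that validator earns, which is why staked TAO behaves like a claim on network cash flow.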

Utility is more abstract to value, so let’s focus on cash flow for now. Assuming the ML market can reach $305B by 2030 (Precedence Research), we can value the Bittensor network based on its potential 2030 market share and revenue multiple.
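The framework reduces to simple arithmetic: implied value = 2030 ML market size × Bittensor’s market share × revenue multiple. The sketch below uses the $305B Precedence Research estimate from the text and backs out TAO’s FDV from the "40x" OpenAI comparison above (roughly $29B / 40 ≈ $0.73B). The market shares and revenue multiples in the grid are hypothetical placeholders, not the figures from the original table, and treating captured market share as protocol revenue is itself an assumption.

```python
from typing import List, Tuple

ML_MARKET_2030_USD_B = 305.0   # Precedence Research estimate cited in the text
OPENAI_VALUATION_USD_B = 29.0
# The text says OpenAI's valuation is ~40x TAO's FDV, so FDV is roughly 29 / 40.
CURRENT_FDV_USD_B = OPENAI_VALUATION_USD_B / 40


def implied_valuation(market_share: float, revenue_multiple: float,
                      market_size: float = ML_MARKET_2030_USD_B) -> float:
    """Valuation in $B = (market size x market share) x revenue multiple."""
    revenue = market_size * market_share
    return revenue * revenue_multiple


def sensitivity_table(shares: List[float],
                      multiples: List[float]) -> List[List[Tuple[float, float]]]:
    """For each (share, multiple) pair, return (valuation in $B, multiple of current FDV)."""
    rows = []
    for share in shares:
        row = []
        for mult in multiples:
            value = implied_valuation(share, mult)
            row.append((value, value / CURRENT_FDV_USD_B))
        rows.append(row)
    return rows


def print_table(shares: List[float], multiples: List[float]) -> None:
    print("share | " + " | ".join(f"{m}x revenue" for m in multiples))
    for share, row in zip(shares, sensitivity_table(shares, multiples)):
        cells = " | ".join(f"${v:.1f}B ({x:.0f}x FDV)" for v, x in row)
        print(f"{share:.0%} | {cells}")


if __name__ == "__main__":
    # Hypothetical 2030 market shares and revenue multiples.
    print_table([0.01, 0.03, 0.05], [5, 10, 20])
```

Each cell shows an implied network value and how many times the current FDV that would be, which is the shape of the sensitivity analysis the argument relies on.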

Returns look highly attractive across (what I believe to be) reasonable upside assumptions. Is a $100B+ valuation crazy? If compounding AI becomes a reality, and Bittensor becomes the most powerful ML model in the world, I think $100B will end up being much too conservative.

(based on TAO FDV as of 5/9/2023)

However you slice it, I have high confidence the token has significant upside if the project executes on its vision.

Writing is on the wall

As a web3 researcher and investor, I’m admittedly biased toward the potential of decentralized networks. So what do those in web2 AI think? They see open-source disruption coming too…

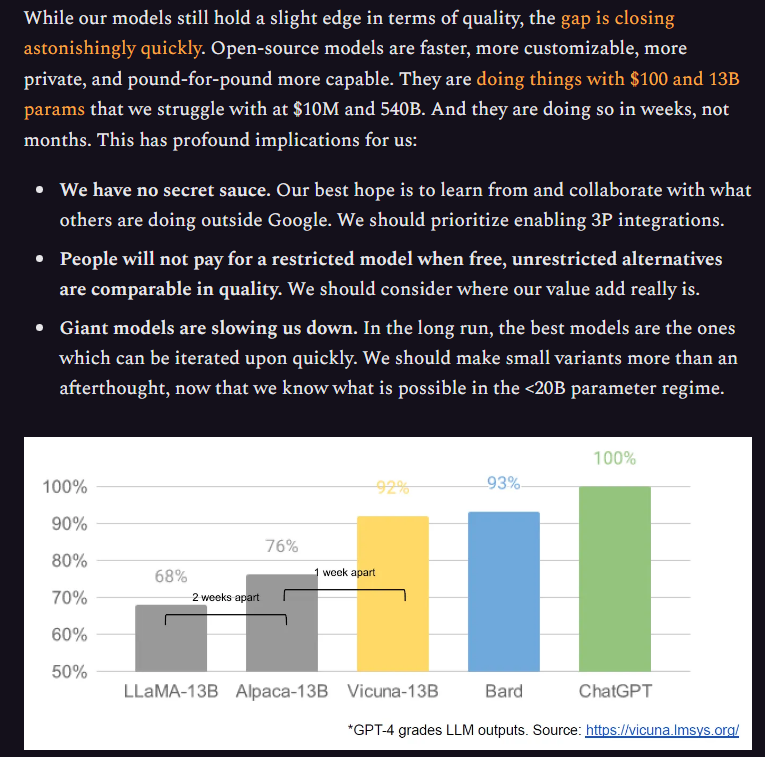

In a leaked internal report, a Google AI engineer admits that neither Google nor OpenAI (nor any siloed model) has a competitive moat, and that open-source ML models will soon surpass their capabilities.

(link to the document; I recommend reading it in full)

Moreover, OpenAI’s CEO Sam Altman recently acknowledged that future AI improvements will not come from ever-larger models, but rather from incremental adjustments:

Given high costs and diminishing returns, even well-funded corporations are having trouble scaling their ML technology. AI legos seem like a logical way to drive the next generation of machine intelligence.

Materials on Bittensor are sparse and highly technical, but I did my best to distill down the basic long-term vision. As always, I’d love to hear feedback from members of the Bittensor community or web2 AI world!
